Artificial intelligence is reshaping creative tools, but generating complete, coherent music has remained slow or limited. ACE-Step 1.5 is an open-source, high-speed foundation model that aims to make professional-quality AI music accessible without costly hardware or long wait times. It brings together speed, control, and expressive output in a way that is useful for creators, developers, and musicians alike.

ACE-Step 1.5 addresses a core pain point in AI music: the trade-off between generation speed and musical coherence. Older approaches relied either on slow large language models or on diffusion models that struggled with long-range structure. ACE-Step 1.5 combines an efficient hybrid architecture with semantic alignment so you can turn prompts into rich, structured music in seconds instead of minutes.

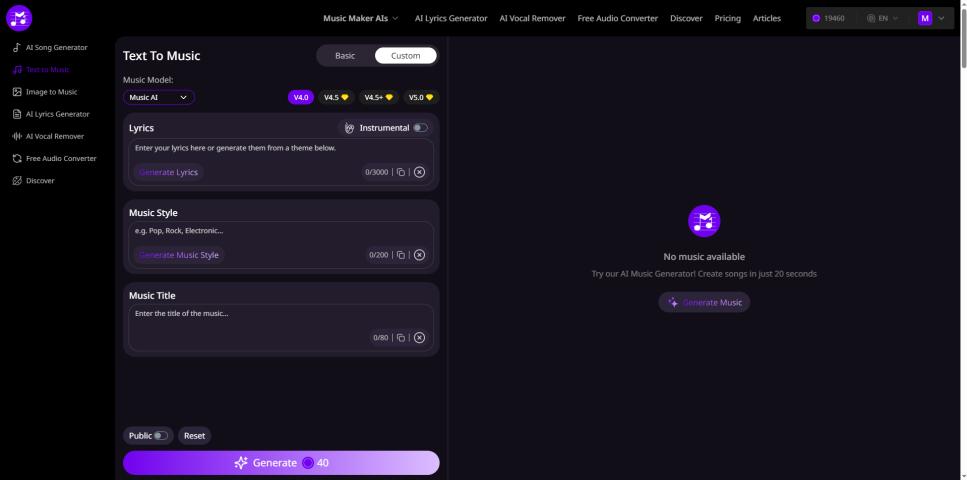

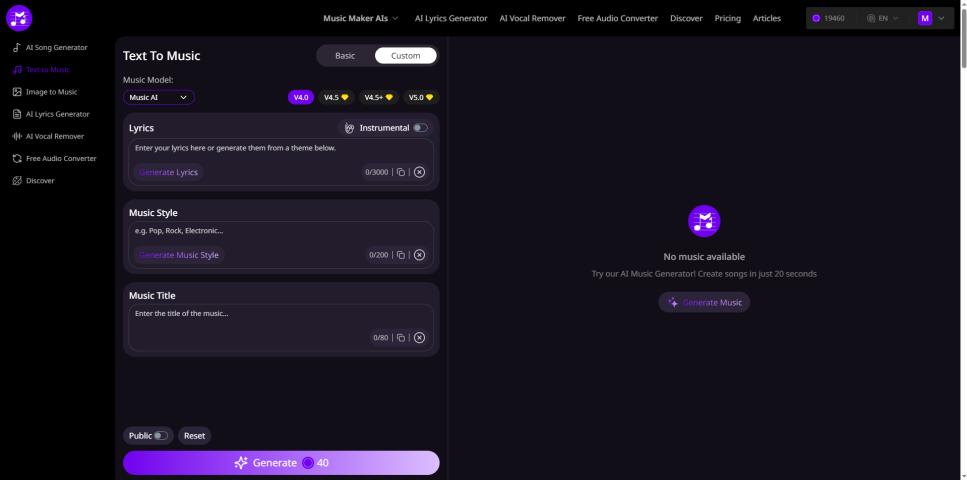

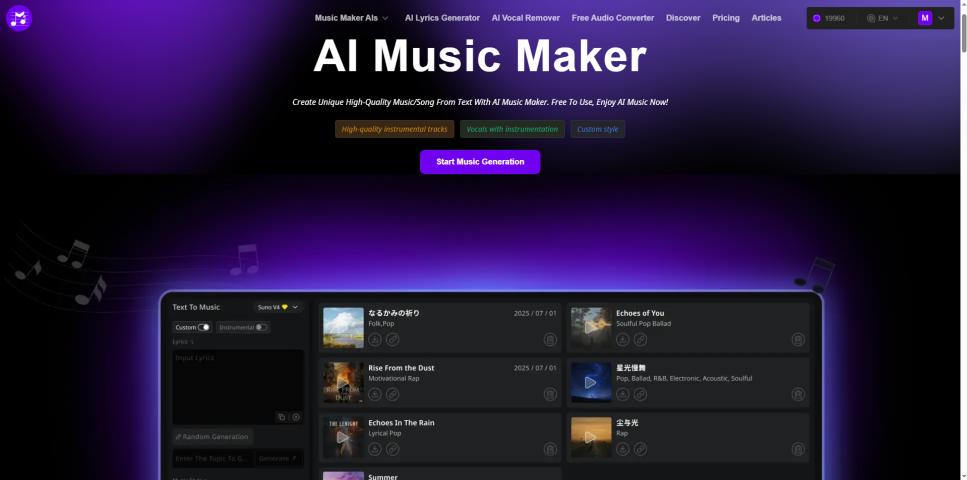

Using ACE-Step 1.5 is straightforward. Start by defining a text prompt: a genre, a mood, or even specific lyrics. The model interprets the semantic idea and generates a full musical piece, from melody to rhythm and harmony. Advanced options let you edit lyrics, control >

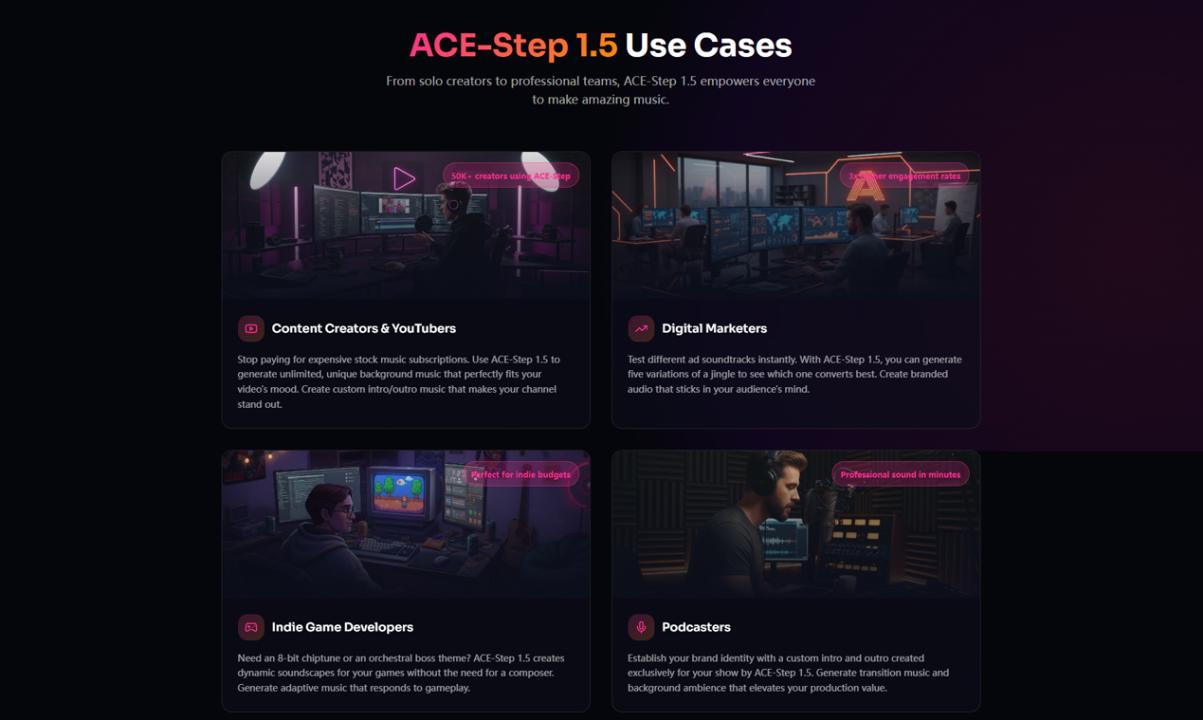
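
The prompt inputs described above (a genre, a mood, and optional lyrics) can be sketched as a small request object. This is only an illustration under stated assumptions: `MusicRequest`, `to_prompt`, `to_payload`, and the `prompt`/`lyrics` keys are hypothetical names chosen for this example and are not taken from ACE-Step 1.5's actual API. Check the project's own documentation for the real interface.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MusicRequest:
    """Hypothetical request shape; NOT ACE-Step 1.5's actual API."""

    genre: str
    mood: str
    lyrics: Optional[str] = None  # lyrics are optional, as in the text above

    def to_prompt(self) -> str:
        # Combine mood and genre into one short text prompt.
        return f"{self.mood} {self.genre}".strip()

    def to_payload(self) -> dict:
        # Include lyrics only when the user actually supplied them.
        payload = {"prompt": self.to_prompt()}
        if self.lyrics:
            payload["lyrics"] = self.lyrics
        return payload


request = MusicRequest(
    genre="synthwave",
    mood="nostalgic",
    lyrics="City lights fade out",
)
print(request.to_payload())
```

Keeping the prompt and the lyrics as separate fields mirrors the way the article treats them as distinct inputs, so either one can be edited without touching the other.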

Beyond quick music prototyping, ACE-Step 1.5’s open-source foundation unlocks deeper creative workflows. Developers can integrate the model into apps, experiment with multilingual music generation, and extend capabilities with voice transformation and remix tools. As AI continues to democratize creative production, tools like ACE-Step 1.5 are helping bridge the gap between imagination and finished work, making advanced music generation practical for everyone from indie artists to startup builders.