Producing short cinematic videos usually requires multiple editing tools, complex rendering workflows, and manual audio synchronization. These steps slow down content teams that need rapid visual prototypes, short ads, or social media storytelling at scale.

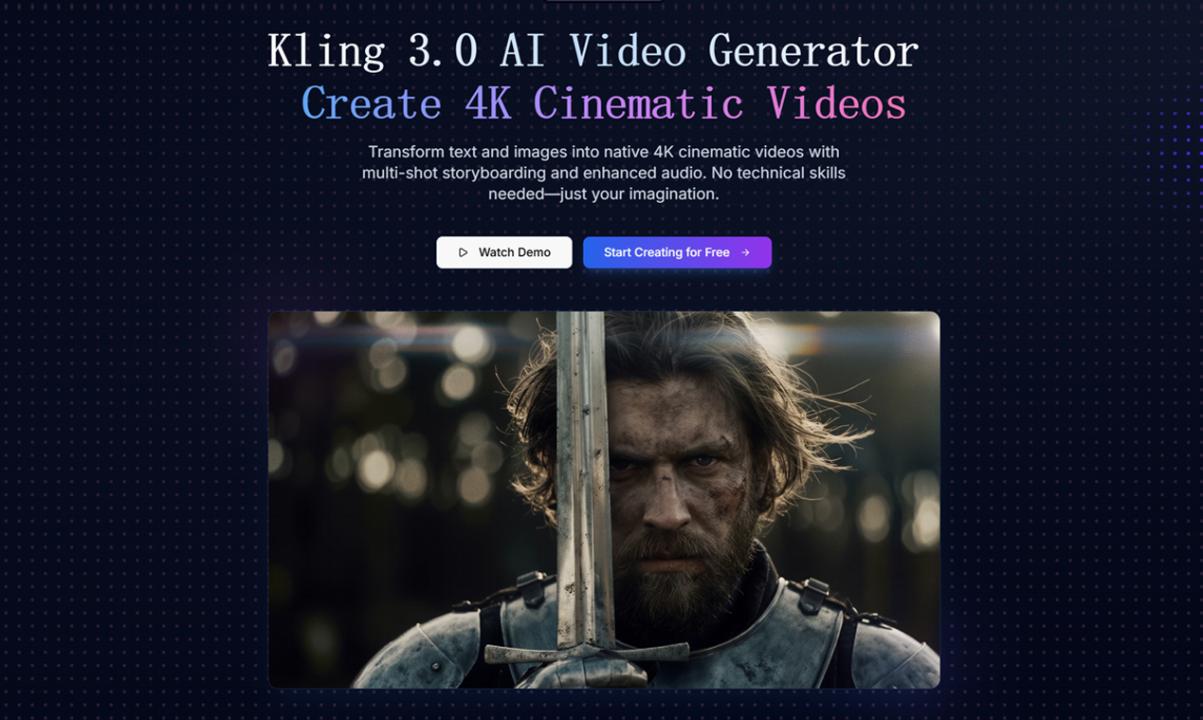

Modern multimodal generation tools simplify this process by combining text-to-video, image-to-video, camera control, and synchronized audio into a single workflow. Platforms such as Kling 3.0 allow creators to define scenes with prompts, maintain consistent characters across shots, and export high-resolution clips suitable for marketing, education, or concept visualization. Features such as character consistency, native audio-visual sync, and 4K rendering reduce the post-production needed to move from idea to usable footage.

A typical workflow has four steps: write a short scene prompt, optionally upload reference images to keep characters consistent, generate a multi-shot sequence, and export the result for editing or distribution. As AI video models continue to improve in motion realism and rendering speed, they are becoming practical tools for rapid content experimentation and small-team production.
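To make the workflow above concrete, here is a minimal Python sketch of how a team might describe a multi-shot scene as plain data before sending it to whichever generation tool they use. It does not call Kling 3.0 or any real service: `Shot`, `SceneRequest`, and every field name are hypothetical, chosen only for illustration, and an actual platform will have its own request format.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class Shot:
    """One shot in a sequence (hypothetical structure, not a real API)."""
    prompt: str
    duration_s: float = 5.0
    camera: str = "static"  # free-text camera direction, e.g. "slow push-in"


@dataclass
class SceneRequest:
    """A multi-shot scene description (hypothetical structure)."""
    title: str
    shots: list[Shot] = field(default_factory=list)
    reference_images: list[str] = field(default_factory=list)  # optional
    resolution: str = "3840x2160"  # 4K UHD
    with_audio: bool = True

    def validate(self) -> None:
        # Catch obvious mistakes before anything is submitted.
        if not self.shots:
            raise ValueError("a scene needs at least one shot")
        for i, shot in enumerate(self.shots):
            if not shot.prompt.strip():
                raise ValueError(f"shot {i} has an empty prompt")
            if shot.duration_s <= 0:
                raise ValueError(f"shot {i} has a non-positive duration")

    def total_duration_s(self) -> float:
        return sum(shot.duration_s for shot in self.shots)

    def to_json(self) -> str:
        # Serialize the validated scene so it can be stored or reviewed.
        self.validate()
        return json.dumps(asdict(self), indent=2)


if __name__ == "__main__":
    request = SceneRequest(
        title="Coffee ad",
        shots=[
            Shot("A barista pours latte art at sunrise", 4.0, "slow push-in"),
            Shot("Close-up of steam rising from the cup", 3.0),
        ],
        reference_images=["barista_front.png"],
    )
    print(request.total_duration_s())
    print(request.to_json())
```

Keeping the scene as data rather than as a one-off prompt makes it easier to review, version, and reuse the same reference images across shots, whatever tool ends up rendering it.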