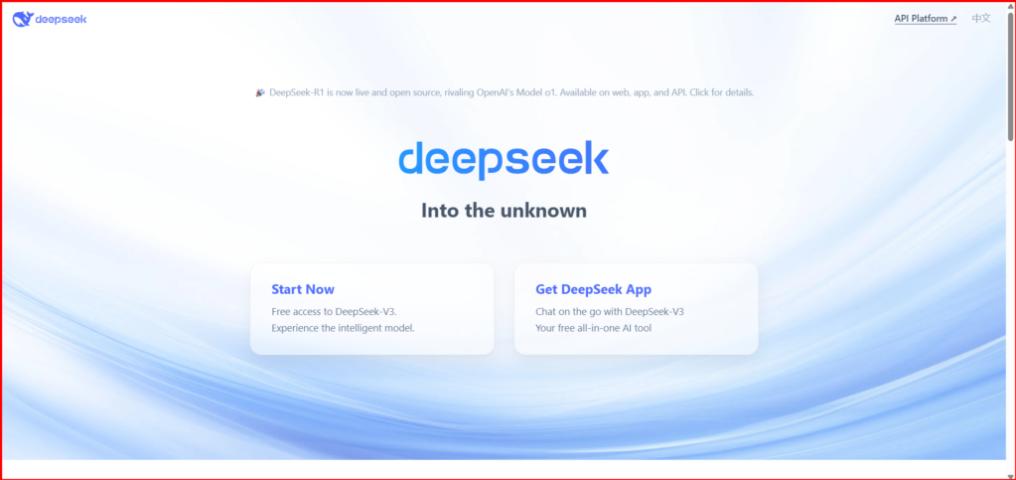

DeepSeek R1 is an open-source AI reasoning model developed by DeepSeek AI, designed to offer advanced reasoning capabilities.

| Detail | Value |
| --- | --- |
| Founded year | 2025 |
| Country | United States of America |
| Funding rounds | Not set |
| Total funding amount | Not set |

Description

DeepSeek-R1 is a state-of-the-art open-source AI reasoning model created by DeepSeek AI for developers and researchers who need advanced reasoning capabilities. Unlike proprietary models, DeepSeek-R1 is released under the MIT license, so users can freely access, modify, and integrate the model into their applications. The model uses a Chain of Thought (CoT) approach, working through intermediate reasoning steps before giving its final answer, to improve performance on complex tasks such as coding and mathematical reasoning. With a maximum context length of 64,000 tokens, it can handle extensive inputs, making it suitable for a variety of applications.
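To make the Chain of Thought behavior concrete, here is a minimal sketch of how an application might separate the model's reasoning from its final answer. It assumes the raw completion wraps its reasoning in `<think>...</think>` tags before the answer; that output format, the helper name `split_reasoning`, and the sample string are illustrative assumptions, not details stated in this listing.

```python
import re

# Assumption: the reasoning trace is wrapped in <think>...</think>
# and the final answer follows the closing tag.
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(raw_output: str) -> tuple[str, str]:
    """Return (reasoning, answer) extracted from a raw model completion.

    If no <think> block is present, the whole text is treated as the answer.
    """
    match = THINK_BLOCK.search(raw_output)
    if match is None:
        return "", raw_output.strip()
    reasoning = match.group(1).strip()
    answer = raw_output[match.end():].strip()
    return reasoning, answer


if __name__ == "__main__":
    # Hypothetical completion, written by hand for illustration.
    sample = "<think>17 * 3 = 51, then 51 + 4 = 55.</think>\nThe result is 55."
    reasoning, answer = split_reasoning(sample)
    print("Reasoning:", reasoning)
    print("Answer:", answer)
```

Keeping the reasoning separate lets an application log or display the intermediate steps while passing only the final answer to downstream code.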

The website serves as a comprehensive platform where users can find detailed documentation, API access options, and community resources to facilitate the use of DeepSeek-R1. It is particularly beneficial for developers seeking cost-effective AI solutions that prioritize transparency and community engagement. The pricing structure for API access is competitive, offering a tiered system based on usage, making it an attractive option for those in need of powerful AI tools without the constraints of proprietary software.

DeepSeek R1 Key Highlights:

🧠 RL-Driven Reasoning: DeepSeek R1 pioneers a unique approach, applying reinforcement learning directly to the base model without prior supervised fine-tuning.

🚀 Powerful Architecture: Features a 671B-parameter Mixture-of-Experts (MoE) architecture with 37B parameters activated per token.

🔥 High-Performing Distilled Models: The distilled variants include a Qwen-32B model that outperforms OpenAI-o1-mini across various benchmarks, achieving new state-of-the-art results for dense models.

✅ Open Source: DeepSeek has generously open-sourced both the main model and several smaller distilled models.

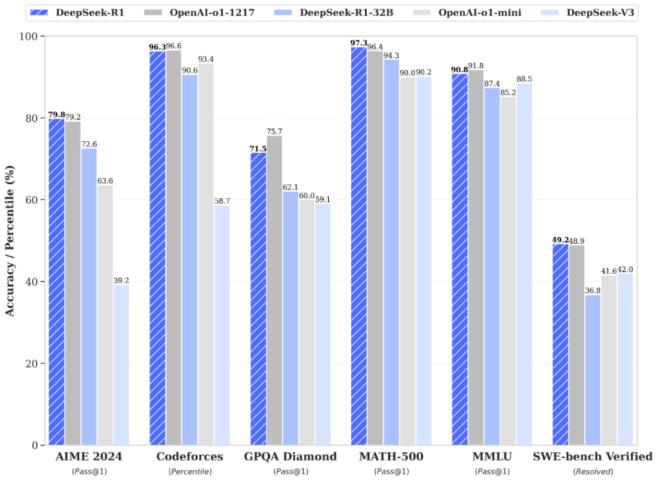

🥇 Superior Performance: Outperforms comparable models on math, code, and reasoning benchmarks.
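The 671B total and 37B activated parameter figures in the highlights above can be put in perspective with a little arithmetic and a toy routing sketch. The code below computes the share of parameters used per token, then shows a generic top-k gating step of the kind MoE models use to pick experts. The function names and the four-expert example are hypothetical, and the gating shown is a textbook simplification, not DeepSeek's actual router.

```python
import math

TOTAL_PARAMS_B = 671   # total parameters, in billions (from the highlights)
ACTIVE_PARAMS_B = 37   # parameters activated per token, in billions


def active_fraction(active_b: float, total_b: float) -> float:
    """Share of the model's parameters that take part in processing one token."""
    return active_b / total_b


def top_k_route(gate_logits: list[float], k: int) -> dict[int, float]:
    """Toy top-k gating: keep the k highest-scoring experts and
    softmax-normalize their scores so the weights sum to 1."""
    ranked = sorted(range(len(gate_logits)),
                    key=lambda i: gate_logits[i], reverse=True)
    chosen = ranked[:k]
    peak = max(gate_logits[i] for i in chosen)  # subtract max for stability
    exps = {i: math.exp(gate_logits[i] - peak) for i in chosen}
    norm = sum(exps.values())
    return {i: e / norm for i, e in exps.items()}


if __name__ == "__main__":
    share = active_fraction(ACTIVE_PARAMS_B, TOTAL_PARAMS_B)
    print(f"{share:.1%} of parameters active per token")

    # Hypothetical gate scores for four experts; route to the best two.
    print(top_k_route([0.1, 2.0, -1.0, 1.5], k=2))
```

Only the selected experts run for a given token, which is why a model of this size can have a per-token compute cost closer to that of a much smaller dense model.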