If you’re a student today, it feels like there’s an AI bot for everything. Type a question into a generic chatbot and you’ll get an answer in seconds. For chemistry homework, that sounds perfect – until you actually try to learn from it.

The problem is simple: most general-purpose AI chatbots were never designed to teach chemistry. They are great at generating fluent text, but much less reliable at handling units, equations, diagrams and multi-step reasoning. The result is a mix of correct-looking answers, hidden mistakes and very little transparency about how those answers were produced.

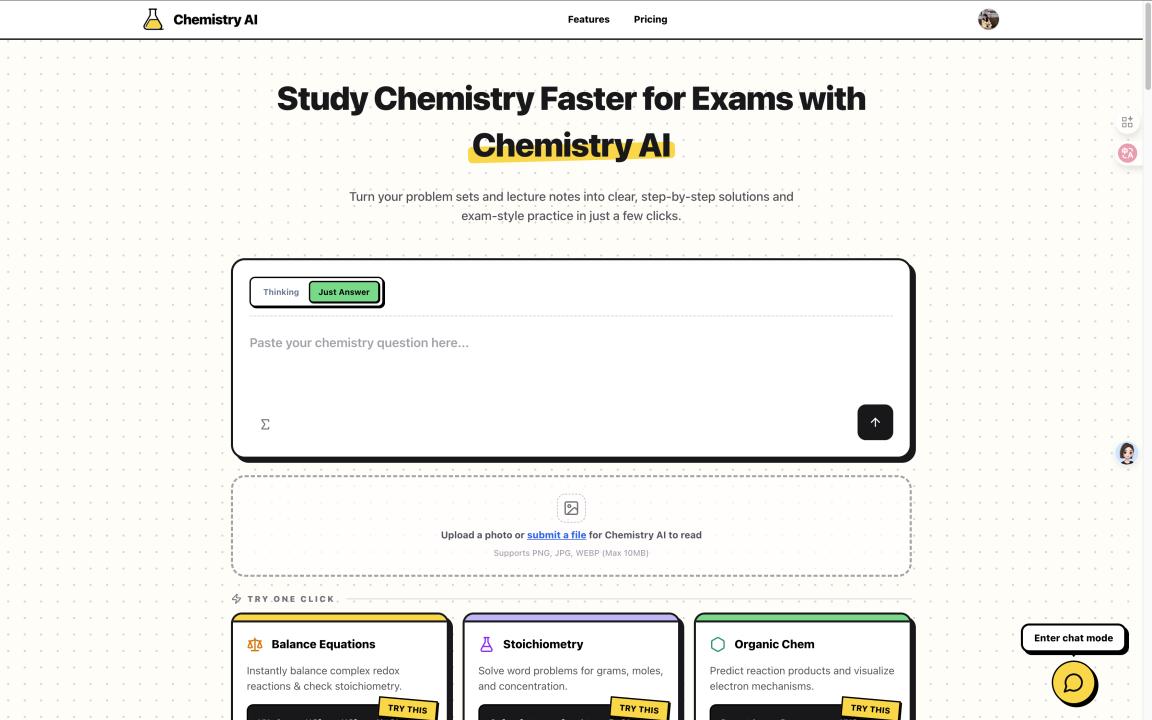

In this article, I want to share what I learned while experimenting with a dedicated chemistry AI solver for students (the one I’m currently building is called Chemistry AI), and why a focused tool can be much more helpful than a generic chatbot.

1. Chemistry is more than text – it’s structure, units and constraints

A general-purpose language model is trained to predict the next word. That’s very different from what a chemistry student actually needs. When you solve a stoichiometry problem or balance a redox equation, you are not just “talking about chemistry” – you are following strict quantitative rules.
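Here is what “following strict quantitative rules” looks like in practice. This minimal Python sketch works through one stoichiometry calculation: grams of water formed from 4.0 g of hydrogen, 2 H2 + O2 → 2 H2O. It keeps every unit conversion explicit and rounds to significant figures only at the end. The molar-mass table and function names are illustrative assumptions, not code from Chemistry AI. The sketch also assumes the equation is already balanced and hydrogen is the limiting reactant.

```python
import math

# Molar masses in g/mol, from standard atomic weights (H = 1.008, O = 15.999).
# Illustrative table only; a real solver would need a complete one.
MOLAR_MASS = {"H2": 2.016, "O2": 31.998, "H2O": 18.015}


def round_sig(value: float, sig_figs: int) -> float:
    """Round a value to the given number of significant figures."""
    if value == 0:
        return 0.0
    decimals = sig_figs - 1 - math.floor(math.log10(abs(value)))
    return round(value, decimals)


def product_mass(mass_reactant_g: float, reactant: str, product: str,
                 coeff_reactant: int, coeff_product: int, sig_figs: int) -> float:
    """Mass -> moles -> mole ratio -> mass, printing each step with its units.

    Assumes the equation is balanced and the given reactant is limiting.
    """
    moles_reactant = mass_reactant_g / MOLAR_MASS[reactant]          # g / (g/mol) = mol
    moles_product = moles_reactant * coeff_product / coeff_reactant  # mol * (mol/mol) = mol
    mass_product = moles_product * MOLAR_MASS[product]               # mol * (g/mol) = g
    print(f"n({reactant}) = {mass_reactant_g} g / {MOLAR_MASS[reactant]} g/mol = {moles_reactant:.4f} mol")
    print(f"n({product}) = {moles_reactant:.4f} mol x {coeff_product}/{coeff_reactant} = {moles_product:.4f} mol")
    print(f"m({product}) = {moles_product:.4f} mol x {MOLAR_MASS[product]} g/mol = {mass_product:.3f} g")
    # Round only once, at the end, to the precision of the given data.
    return round_sig(mass_product, sig_figs)


# 2 H2 + O2 -> 2 H2O: how many grams of water from 4.0 g of H2 with excess O2?
answer = product_mass(4.0, "H2", "H2O", coeff_reactant=2, coeff_product=2, sig_figs=2)
print(f"Answer: {answer:g} g")
```

The unrounded result, 35.744 g, is carried to the last line, which reports 36 g because “4.0 g” has two significant figures. If you round intermediate values too early, the final answer can drift away from the one a teacher expects.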

Generic chatbots often struggle with:

Moles, mass and volume conversions – they might skip steps or mix up units.

Significant figures and precision – answers look plausible but don’t match what teachers expect.

Chemical equations – sometimes they “balance” equations in a way that violates conservation of mass.

For quick brainstorming this is fine. For graded homework or exam prep, it’s risky. Students either copy the answer without understanding, or waste time debugging where the bot went wrong.

A chemistry-focused solver can work differently. It can treat equations, units and constants as first-class objects and add guardrails designed for chemistry rather than for plain text. One example is verifying that a “balanced” equation actually conserves every element.
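One such guardrail is to count every element and the net charge on both sides before presenting a “balanced” equation. Below is a minimal Python sketch built on simplifying assumptions. Each species is given as a coefficient, a plain formula and an integer charge, and the parser does not handle hydrates or charge notation inside formulas. The function names are hypothetical.

```python
import re
from collections import Counter

# An element symbol or a parenthesis, followed by an optional count: "Mn", "O4", "(", ")2".
TOKEN = re.compile(r"([A-Z][a-z]?|\(|\))(\d*)")


def parse_formula(formula: str) -> Counter:
    """Count atoms in a formula such as 'Ca(OH)2' (no hydrates, no charge symbols)."""
    stack = [Counter()]
    position = 0
    for match in TOKEN.finditer(formula):
        if match.start() != position:
            raise ValueError(f"cannot parse {formula!r} at position {position}")
        position = match.end()
        symbol, digits = match.groups()
        count = int(digits) if digits else 1
        if symbol == "(":
            stack.append(Counter())          # open a new group
        elif symbol == ")":
            if len(stack) == 1:
                raise ValueError(f"unmatched ')' in {formula!r}")
            group = stack.pop()              # close the group and multiply it out
            for element, n in group.items():
                stack[-1][element] += n * count
        else:
            stack[-1][symbol] += count
    if position != len(formula) or len(stack) != 1:
        raise ValueError(f"cannot parse {formula!r}")
    return stack[0]


def side_totals(side: list[tuple[int, str, int]]) -> tuple[Counter, int]:
    """Total atoms and net charge for one side, given (coefficient, formula, charge) tuples."""
    atoms, charge = Counter(), 0
    for coefficient, formula, ion_charge in side:
        for element, n in parse_formula(formula).items():
            atoms[element] += coefficient * n
        charge += coefficient * ion_charge
    return atoms, charge


def check_balance(reactants, products) -> list[str]:
    """Return a list of problems; an empty list means both atoms and charge balance."""
    left_atoms, left_charge = side_totals(reactants)
    right_atoms, right_charge = side_totals(products)
    problems = [
        f"{element}: {left_atoms[element]} on the left vs {right_atoms[element]} on the right"
        for element in sorted(set(left_atoms) | set(right_atoms))
        if left_atoms[element] != right_atoms[element]
    ]
    if left_charge != right_charge:
        problems.append(f"charge: {left_charge:+d} on the left vs {right_charge:+d} on the right")
    return problems


# MnO4- + 5 Fe2+ + 8 H+ -> Mn2+ + 5 Fe3+ + 4 H2O
print(check_balance(
    [(1, "MnO4", -1), (5, "Fe", 2), (8, "H", 1)],
    [(1, "Mn", 2), (5, "Fe", 3), (4, "H2O", 0)],
))  # []
print(check_balance([(1, "H2", 0), (1, "O2", 0)], [(1, "H2O", 0)]))
# ['O: 2 on the left vs 1 on the right']
```

The redox example balances in both atoms and charge (+17 on each side). The unbalanced H2 + O2 → H2O is flagged for oxygen.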

2. Students don’t just want the answer – they need the reasoning

When I talked to students, almost nobody said “I only want the final number.”

What they really wanted was:

“Show me the steps so I can follow the logic.”

“Highlight which formula you chose and why.”

“Explain where I went wrong in my own attempt.”

Generic chatbots can sometimes produce step-by-step solutions, but the structure is inconsistent. One response is detailed, the next is a wall of text, the third skips half the reasoning. There is no standard template that students can get used to.

In a dedicated chemistry AI solver, the default experience can be:

The problem is broken down into clear numbered steps.

Each step shows the formula, substitution and unit checks.

The final answer is separated from the reasoning, so you can peek at the process without spoiling the result immediately.
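A template like this can be expressed as a simple data structure. Below is a minimal Python sketch using hypothetical `Step` and `Solution` classes (not Chemistry AI’s actual code), with a water-from-hydrogen calculation as sample data. Every step renders with the same labels, and the final answer stays hidden until the student asks for it.

```python
from dataclasses import dataclass


@dataclass
class Step:
    """One numbered step: which formula, how the numbers go in, and a unit check."""
    title: str
    formula: str
    substitution: str
    unit_check: str


@dataclass
class Solution:
    steps: list[Step]
    final_answer: str

    def render(self, reveal_answer: bool = False) -> str:
        """Render every step in the same layout; keep the final answer hidden until requested."""
        lines = []
        for number, step in enumerate(self.steps, start=1):
            lines.append(f"Step {number}: {step.title}")
            lines.append(f"  Formula:      {step.formula}")
            lines.append(f"  Substitution: {step.substitution}")
            lines.append(f"  Unit check:   {step.unit_check}")
        answer = self.final_answer if reveal_answer else "(hidden, reveal when ready)"
        lines.append(f"Final answer: {answer}")
        return "\n".join(lines)


solution = Solution(
    steps=[
        Step("Convert mass of H2 to moles", "n = m / M",
             "n = 4.0 g / 2.016 g/mol = 1.98 mol", "g / (g/mol) = mol"),
        Step("Apply the 2:2 mole ratio", "n(H2O) = n(H2) x 2/2",
             "n(H2O) = 1.98 mol", "mol x (mol/mol) = mol"),
        Step("Convert moles of H2O to mass", "m = n x M",
             "m = 1.98 mol x 18.015 g/mol = 35.7 g", "mol x (g/mol) = g"),
    ],
    final_answer="36 g of H2O (2 significant figures)",
)
print(solution.render())
```

Because every solution is rendered from the same structure, the layout stays identical from one problem to the next. `solution.render(reveal_answer=True)` adds the final line once the student is ready.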

The goal is not to be the smartest chem AI in the world. The goal is to be the clearest study partner in your browser.

3. Images matter: many real problems live on paper

Another limitation of generic chatbots is their text-only mindset. Real students don’t live inside plain text. Their questions live in:

photos of worksheets,

screenshots of lecture slides,

hand-written notes from class.

For a chemistry AI solver to be truly useful, it has to understand those photos. That means reliable OCR, diagram reading and enough context to figure out which part of the problem actually matters.

In the solver I’m working on, a student can snap a photo of a worksheet, upload it and get a structured, step-by-step explanation instead of manually re-typing everything. This may sound like a small UX improvement, but it matches how students really work on a daily basis.

4. Learning vs. copying: designing for the right behavior

Any homework helper has a tension at its core:

If it only gives final answers, it becomes a “copy-paste” tool.

If it explains too little, students still don’t understand the underlying concept.

A better approach is to put learning first and copying second:

Providing a quick “Just Answer” mode for checking a result.

Offering a “Thinking” mode that walks through all the logic in detail.

Designing the interface so that the steps are easy to skim and review, not just to show off that an AI solved it.

I’ve also seen early users put this kind of tool to creative use:

generating extra practice questions on a topic they feel weak in,

checking their own handwritten solutions step by step,

using it as a “second opinion” when a textbook solution feels confusing.

The more they interact with the reasoning, the more the tool shifts from “answer machine” to “study coach”.
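Checking a handwritten solution step by step comes down to finding the first step where the student’s number stops matching a reference solution. Below is a minimal Python sketch. It assumes the student’s intermediate values have already been extracted (typed in, or read from a photo) and are listed in the same order as the reference steps. The function name and the 1% tolerance are illustrative assumptions.

```python
import math


def first_divergent_step(
    reference: list[tuple[str, float]],
    attempt: list[float],
    rel_tol: float = 0.01,
) -> str:
    """Report the first step where a student's value departs from a reference solution.

    reference: (description, value) pairs in solving order.
    attempt:   the student's value for each step, in the same order; extra values are ignored.
    rel_tol:   relative tolerance, so ordinary rounding of intermediate values is not flagged.
    """
    for number, ((description, expected), given) in enumerate(zip(reference, attempt), start=1):
        if not math.isclose(given, expected, rel_tol=rel_tol):
            return (f"Step {number} ({description}): expected about {expected:.3g}, "
                    f"got {given:.3g}. Everything before this step matches.")
    if len(attempt) < len(reference):
        return f"All {len(attempt)} submitted step(s) match; step {len(attempt) + 1} is missing."
    return "All steps match the reference."


# Reference values for 4.0 g of H2 -> grams of H2O (2 H2 + O2 -> 2 H2O).
reference = [("moles of H2", 1.984), ("moles of H2O", 1.984), ("mass of H2O", 35.74)]

# A student who applied a 2:1 ratio instead of 2:2 in step 2.
print(first_divergent_step(reference, [1.98, 0.992, 17.87]))
# Step 2 (moles of H2O): expected about 1.98, got 0.992. Everything before this step matches.
```

Pointing at the first divergent step, rather than only marking the final number wrong, tells the student exactly where to look in their own work.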

5. Where a dedicated chemistry AI fits in the student’s toolbox

I don’t think a specialised chemistry AI needs to replace all other tools. Students will still use generic chatbots for writing, flashcards and brainstorming. What a focused chem AI can do is fill a very specific gap:

Reliable quantitative reasoning for chemistry problems.

Consistent step-by-step format that matches how teachers grade.

Image support so they don’t have to fight with formatting.

For many students, that’s the missing piece between “I vaguely get this topic” and “I can confidently solve exam-style problems.”

6. If you’re building or studying, I’d love your feedback

If you’re working on similar tools, or if you’re a student/teacher curious about how a dedicated chemistry AI solver feels in practice, you can check out the prototype I’m building at https://chemistryai.chat/.

I’d love any feedback on what such a tool should do better – whether that’s in my project Chemistry AI or in any other chemistry-focused AI. The more we design these tools around real learning instead of just faster copying, the more useful they’ll be for students.